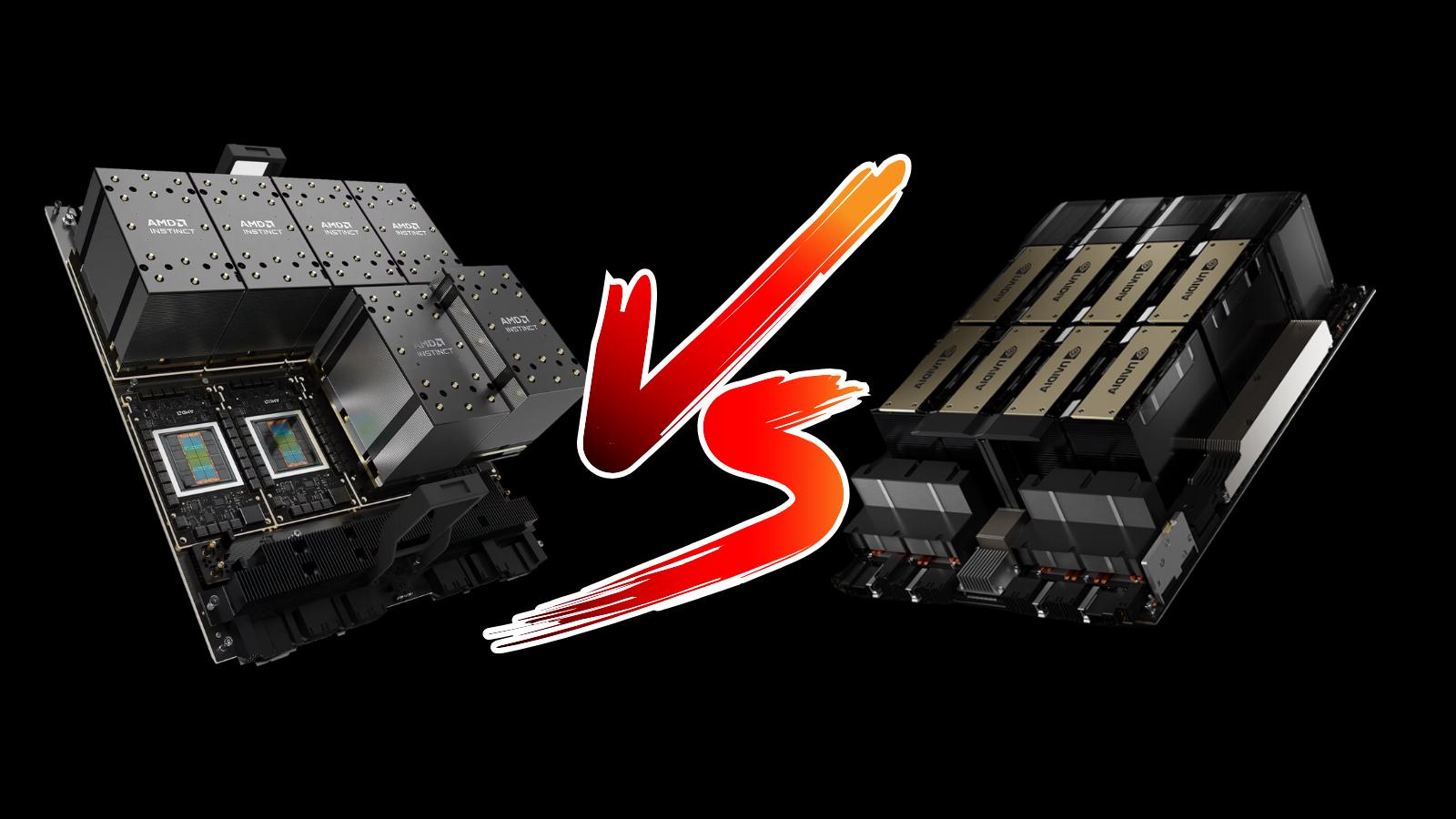

AMD's MI300X Outperforms NVIDIA's H100 for LLM Inference

There has been much anticipation around AMD’s flagship MI300X accelerator. Its raw specs are unmatched, so the pressing question is whether it can outperform NVIDIA’s Hopper architecture in real-world AI workloads. We have some exciting early results to share.

For the past month, TensorWave and MK1 have worked closely to unlock the performance of AMD hardware for AI inference. To start, we focused on Mixture of Experts (MoE) architectures because of their compute efficiency and popularity: Mistral, Meta, Databricks, and X.ai all use them for their most powerful open-source LLMs.

The initial results are impressive: using MK1’s inference software, the MI300X achieves 33% higher throughput than the H100 SXM running vLLM on Mixtral 8x7B for a real-world chat use case. Although NVIDIA’s software ecosystem is more mature, AMD is clearly already a formidable competitor in the AI market. Once hardware availability and cost are factored in, the MI300X is an attractive option for enterprises running large-scale inference in the cloud.

We expect AMD’s performance advantage to grow further with additional optimization, so stay tuned for more updates! - Darrick Horton, CEO of TensorWave
